SELECT [tips tricks and commentary] FROM [my experiences] WHERE subject IN ('database', 'data warehouse', 'business intelligence')

Wednesday, December 21, 2011

TFS 2010 Can't Convert Branch to Folder

I'm not sure if it's true but a colleague of mine told me that Microsoft purposely did this to help avoid accidental conversion of branches to folders by making it harder to get to the command. It also helps make it very confusing and frustrating - IMHO.

Thursday, December 15, 2011

SQL Server 2008 R2 Master Data Services: A Review

- Data modeling (entities, attributes, hierarchies, etc.)

- Data browsing

- Manual data maintenance (add, edit, delete) via a modern UI

- Bulk import and export

- Data and Model versioning

- Audit capabilities (who changed what when)

- Business rules for data validation

- Workflow based data validation

- Ability to integrate (both ways) with other systems

- Fine-grained security

Purpose

The purpose of MDS is to provide a platform for the creation, maintenance, and integration of high quality master data throughout an organization.

Architecture

Database

Web Service

All MDS functionality can be executed via the IIS hosted, WCF-based web service named Service. It can be used to create a custom UI and/or integrate it with existing and/or new applications.

.NET API

Although using the web service directly is recommended, MDS provides three .NET assemblies (Microsoft.MasterDataServices.Core, Microsoft.MasterDataServices.Deployment, Microsoft.MasterDataServices.Services). These are typically used for administrative functions such as creating and deploying update and deployment packages and cloning and deleting models.

Generate Insert Statements for SQL Server

It turns out Microsoft provides a tool to do this for us with ease. It is called the Database Publishing Wizard. This tool can script both DDL and DML. The actual executable is named SqlPubWiz.exe and can be found in a path similar to C:\Program Files (x86)\Microsoft SQL Server\90\Tools\Publishing\1.4.

Give it a shot. It's a nice little tool to have in the toolbox.

BIDS (Visual Studio 2008 Shell) with TFS 2010

Wednesday, December 14, 2011

SSDT vs. VSDB

Dimensional Modeling Skills Assessment

The assessment consists of business requirements, modeling requirements, an OLTP normalized, physical data model and some questions. The candidate is asked to review the requirements and data model and create a dimensional model that will serve as the basis for a data warehouse. There are also some follow-up questions.

The current version of the assessment asks for a dimensional model but has some questions that cross over into the physical world and assumes a SQL Server environment. It can probably be improved or at least made applicable to more organizations by making it more generic. However the position I created it for requires some knowledge of SQL Server so I incorporated it into the test.

Below is the problem statement and the physical OLTP data model. You can download the entire assessment here. Please e-mail me at david@elish.net if you'd like the answers.

Wednesday, November 16, 2011

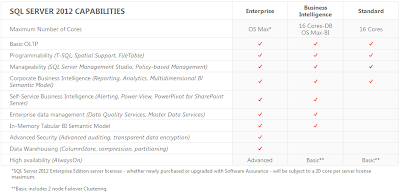

SQL Server 2012 Editions

Tuesday, November 15, 2011

The Excel Connection Manager is not supported in the 64-bit version of SSIS

- SSIS Error Code DTS_E_CANNOTACQUIRECONNECTIONFROMCONNECTIONMANAGER. The AcquireConnection method call to the connection manager "YourConnectionMgrName" failed with error code 0xC00F9304. There may be error messages posted before this with more information on why the AcquireConnection method call failed.

- [Connection manager "YourConnectionMgrName" Error: SSIS Error Code DTS_E_OLEDB_EXCEL_NOT_SUPPORTED: The Excel Connection Manager is not supported in the 64-bit version of SSIS, as no OLE DB provider is available.

Overwrite Excel File in SSIS: Workaround for Excel Connection Manager

Current File Name: Extract.xls

Historical File Name: Extract_YYYYMMDD_MMSS.xls

I figured this would be easy. The first thing I did was look for the OverwriteFile or ReplaceFileproperty. What I found was that the only non-connection specific property the Excel Connection Manager exposed was FirstRowHasColumnName. At that point I figured I would experiment and find out what would happen if I tried to write to a pre-existing file. The result was that the data being written to the file was simply appended to the pre-existing data in the file. Definitely not the behavior I was looking for.

I then hit "the Google" as George W. so fondly calls it. I found solutions that proposed deleting data from worksheet(s), deleting worksheets, and creating new worksheets using the Jet 4.0 SQL syntax to "DROP" and "CREATE" tables. In Jet/Excel terms, a table is an Excel worksheet. I found that the DROP didn't actually delete the worksheet but deleted rows from the worksheet. This could have worked except that the next time the worksheet is written to, the new rows start after the last row of the previously deleted data, leaving a bunch of empty rows in the beginning of the worksheet. This approach did not cut the mustard. So ended my search for the wheel I was attempting to not reinvent.

The approach I came up with does the following.

- Creates a shell (only the headers) Excel file with the name Extract_wip.xls.

- Uses a Data Flow to populate the wip Excel file.

- Copies the wip file to an Archive folder but renames to Extract_YYYYMMDD_MMSS.xls.

- Renames the wip file to Extract.xls, overwriting the previously created Extract.xls files (if one exists).

Monday, October 31, 2011

SQL Server Default Backup Location

Friday, October 21, 2011

The Future of Visual Studio Database Projects

- Code navigation (Go to Definition, Find All References)

- Enhanced code analysis and design time validation

- Table designer similar to SSMS

- Connected (live database) and disconnected (declarative) development

- LocalDB - SQLExpress without the need to explicitly install any SQL Server instances

- Entity Framework integration for a much more tightly integrated development experience between the data and application layers

- Support for CLR objects

- Support for SQL Server 2012 and SQL Azure databases (both support 2005, 2008, and 2008 R2)

- Integration of SSIS, SSRS, and SSAS projects into the next edition of Visual Studio (currently Visual Studio 2011)

Wednesday, October 12, 2011

SSIS Configuration File (.dtsconfig) Behavior (relative path issue)

Monday, September 12, 2011

OLE DB Destination or SQL Server Destination

Wednesday, June 22, 2011

SQL Bulk Copy

I got a new set of files from a new business unit that we were bringing into our data warehouse solution. Of their 20 files 14 loaded without issue and the remaining 6 failed with an error similar to the one below.

System.Reflection.TargetInvocationException: Exception has been thrown by the target of an invocation. ---> System.InvalidOperationException: The given value of type String from the data source cannot be converted to type int of the specified target column.

I kept going back to the developers that created the extract process and telling them that they were doing something wrong with their encoding or delimiters but neither us nor them could actually find the issue. Until now.

Turns out the issue is related to SQL Bulk Copy, numeric columns, and null values. Apparently SQL Bulk Copy does not, by default, keep nulls. Instead, it converts them to empty strings. Well, as I'm sure you know, SQL Server doesn't like it when you try to load empty strings, or any strings for that matter, into a numerically typed column.

There are a couple of solutions.

- Make sure to replace all nulls with zeros when created flat files with numeric data that will be consumed by BCP.

- Use the KeepNulls option when executing BCP. In my SSIS solution I changed my SqlBulkCopy object creation to look like this . . .

Tuesday, June 14, 2011

Data Warehouse QA Technical Screen Questions

So that I didn't sound like a bumbling fool when I made the call I put together a list of questions. It's by no means exhaustive but I figured it could be helpful to some . . .

Monday, March 28, 2011

A One-Package, Generic SSIS Staging Process

I recently worked on a project that was flat file and staging intensive. By intensive I mean we had essentially 1.5 ETL developers and over 50 flat files to stage. We also had a very aggressive timeline. Two data marts with source data coming from 5 totally disparate and disconnected (hence the flat files) subsidiaries/source systems all over the world (think currency conversion, translation, master data management, etc.) and only three and a half months to get it done.

Was I going to create a package to stage each flat file? Ummm . . . no! So what was I to do? I was going to create a single, metadata driven package that would loop through a data set and populate my staging tables one by one. Below is a screenshot of all the components necessary to make this work. There is no Data Flow.

Before going into the details there are a few things to keep in mind.

- This solution is based on text files. It can easily be tailored to read from relational sources and also non-relational sources with a little more tweaking.

- What makes this design possible is a Script Task that alleviates the need for custom data flows for each extract file/table to staged.

- The solution assumes that the staging tables can hold more than one day's/load's worth of data and therefore adds an ExtractFileID column to each staging table and to the source data as it is being loaded.

- For the script task to work as-is it is necessary to create a staging table for each data source that will be staged. Each staging table must meet the following criteria:

- The staging table must contain column names that match the data source column names exactly.

- The staging table columns must have data types that are appropriate for the incoming data. If they are character data types they must be large enough that they will not truncate any incoming data.

rowCount += 1

Throw ex