It is now possible to use the Team Foundation Service Preview (Microsoft hosted TFS) with Visual Studio 2008 and BIDS (VS 2008 Shell).

1) Install Visual Studio Team System 2008 Team Explorer http://www.microsoft.com/en-us/download/details.aspx?id=16338.

2) Install Visual Studio 2008 SP1 even if it has previously been installed http://www.microsoft.com/en-us/download/details.aspx?id=10986.

3) Install the Visual Studio 2008 SP1 Compatibility GDR for Visual Studio 2012 Team Foundation Server and Team Foundation Service Preview http://www.microsoft.com/en-us/download/details.aspx?id=29983.

4) Add your server (assuming you've already signed up for TFS preview) using the following URL: https://<yoursite>.visualstudio.com/defaultcollection.

If you receive an error stating:

TF31002 Unable to connect to this Team Foundation Server...

Possible reasons for this failure include:

-The Team Foundation Server name, port number, or protocol is incorrect.

-The Team Foundation Server is offline.

-Password is expired or incorrect.

MAKE SURE YOU ADD /defaultcollection to the end of your URL.
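Since the missing /defaultcollection suffix is the usual culprit, a small helper can make the rule explicit. This is only a sketch: the function name and the "contoso" site name are made up for illustration and are not part of any TFS tooling.

```python
def ensure_default_collection(url: str) -> str:
    """Return the URL with /defaultcollection appended if it is not already there."""
    stripped = url.rstrip("/")
    if stripped.lower().endswith("/defaultcollection"):
        return stripped
    return stripped + "/defaultcollection"


if __name__ == "__main__":
    # "contoso" is a hypothetical account name standing in for <yoursite>.
    print(ensure_default_collection("https://contoso.visualstudio.com"))
```

Paste the resulting URL into the Add Team Foundation Server dialog.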

SELECT [tips tricks and commentary] FROM [my experiences] WHERE subject IN ('database', 'data warehouse', 'business intelligence')

Monday, November 26, 2012

Wednesday, October 3, 2012

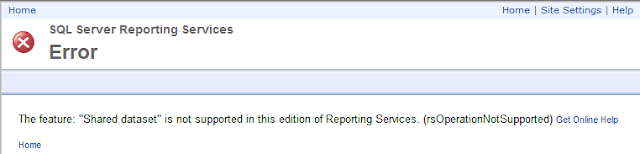

The feature: "Shared dataset" is not supported in this edition of Reporting Services. (rsOperationNotSupported)

Ouch. I was recently working on a project that started with SQL Server 2008 R2 Standard Edition and eventually ended up with SQL Server 2012 Web Edition in a hosted environment. When I attempted to stand up a staging environment on the 2012 Web Edition instance I received the following error.

The feature: "Shared dataset" is not supported in this edition of Reporting Services. (rsOperationNotSupported)

I came to find out that the "Shared component library" feature of SSRS is only available in Standard Edition and up. I have to admit that before we moved from 2008 R2 to 2012 I reviewed the Features Supported by the Editions of SQL Server 2012 MSDN page and didn't realize that the Shared Dataset feature was a subset of the Shared component library feature. It appears that this feature is also sometimes referred to as the "Report Part Gallery" as can be seen on the Programming Features for Reporting Services Editions page, also on MSDN.

Monday, August 27, 2012

Publish SSDT Projects Without Visual Studio Using SqlPackage.exe

If you'd like to publish your SSDT database project but can't reach your target database from the machine where you run Visual Studio (with SSDT), and/or you don't have Visual Studio installed on your target server, you can still publish your database by using SqlPackage.exe.

For those familiar with Visual Studio Database Projects (VSDB), SqlPackage.exe is the replacement for VSDBCMD.exe.

Microsoft provides a WPI (web platform installer) for the Microsoft SQL Server 2012 Data-Tier Application Framework which lays down everything required to use the SqlPackage command line utility. You can also install the individual components from the Microsoft SQL Server 2012 Feature Pack if you prefer. They consist of the following:

- dacframework.msi

- SQLDOM.msi

- SQLLS.msi

- SQLSysClrTypes.msi

Once everything is installed you should be able to find SqlPackage.exe in a location similar to "C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin".

Below is a basic sample of how to execute SqlPackage.exe.

C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin\sqlpackage.

For an exhaustive list of available parameters see MSDN.
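As a rough illustration of a publish call, the sketch below assembles a SqlPackage command line from Python. The /Action, /SourceFile, /TargetServerName and /TargetDatabaseName parameters are standard SqlPackage options. The .dacpac path, server name and database name are placeholders I made up, and the helper functions are hypothetical.

```python
import subprocess

# Install location mentioned above; adjust it to match your machine.
SQLPACKAGE = r"C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin\SqlPackage.exe"


def build_publish_command(dacpac_path, server, database, exe=SQLPACKAGE):
    """Return the argument list for publishing a .dacpac to a target database."""
    return [
        exe,
        "/Action:Publish",
        f"/SourceFile:{dacpac_path}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
    ]


def publish(dacpac_path, server, database):
    """Run SqlPackage.exe; raises CalledProcessError if the publish fails."""
    subprocess.run(build_publish_command(dacpac_path, server, database), check=True)


if __name__ == "__main__":
    # Placeholder values: only prints the command, does not run it.
    print(build_publish_command(r"C:\deploy\MyDatabase.dacpac", "localhost", "MyDatabase"))
```

Passing the arguments as a list avoids quoting problems with the spaces in the Program Files path.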

Wednesday, August 22, 2012

SSDT: unresolved reference to object [dbo].[sp_executesql].

Procedure: Some_Procedure has an unresolved reference to object [dbo].[sp_executesql].

Look familiar? There's a simple solution. We just need to add a reference to the master database.

1) Right-click the References folder in Solution Explorer and choose Add Database Reference...

2) Select the "master" System database.

3) Voila. The reference appears under the folder and the warning goes away.

Tuesday, August 14, 2012

Creating a CLR Function in SSDT

Creating a CLR function in SSDT (SQL Server Data Tools) is not very difficult but if you've never done it before you might be wondering where to start. Here goes...

Enabling CLR

First things first. While not directly related to SSDT, you must enable CLR on your SQL Server instance, since whatever code you end up writing won't be able to execute until this is done. All we have to do is set the clr enabled configuration option to 1. See below.

sp_configure 'show advanced options', 1;
GO
RECONFIGURE;
GO
sp_configure 'clr enabled', 1;
GO
RECONFIGURE;
GO

SSDT Project Properties

You must tell your SSDT project which version of the .NET Framework to use. If you're running SQL Server 2008 R2 you can use .NET Framework 3.5 or lower. SQL Server 2012 supports .NET Framework 4.0. To get to the screen below, right-click your project in Solution Explorer, select Properties, and then click the SQLCLR tab on the left-hand side. Set your Target framework appropriately.

Add a Function to the Project

Right-click within the project and select Add > New Item...

Choose SQL CLR C# User Defined Function to add a function to the project.

Define the Function

Below is a sample function from MSDN. Obviously you can create a function to do whatever you're trying to accomplish.

Publish

Publish your SSDT project as you normally would.

SSDT makes database development and deployment a breeze but sometimes the approach can be less than intuitive. If you're used to creating CLR objects manually you might have been wondering how to create the assembly and the T-SQL reference to the CLR object's method. SSDT is kind enough to do this work for us.

If you look at Object Explorer you'll see the new assembly (DBProj) and the new dbo.validatePhone function.

If you further explore the dbo.validatePhone function you'll see that it is a wrapper for your CLR function.

Use it

Simple as that.

Conclusion

CLR objects can be as complex as the code that you write but SSDT makes their development and deployment easier. Hopefully this post shows you just how easy it is.

Friday, August 10, 2012

SSIS Event Handlers: Behavior In Package Hierarchy

I recently stumbled onto what seemed to be an odd performance problem in an SSIS project I was working on. The problem was that I had a number of straightforward packages that ran extremely fast but sometimes, without explanation, ran really slowly.

Luckily the project included a robust logging system that provided, among other things, the execution duration of every package and Control Flow task. The logs showed that the Data Flows in the packages in question were running in 10 to 20 seconds, yet the packages were at times taking upwards of 20 minutes. Furthermore, I found that when called on their own, each package did not run much longer than its single Data Flow, yet when run as a child of another package (via an Execute Package task) the execution duration more than doubled. In one example I had a package that ran in 10 seconds on its own, 23 seconds as a child, 56 seconds as a child three levels deep, and almost 3 minutes when run as a child four levels deep. In that same example the Data Flow took only 7 seconds. So why was the package so slow when run as a child of one or more packages?

Time for SQL Server Profiler.

The logging mechanisms in place were stored procedures being called by Execute SQL tasks and were being passed things like a batch Id, Package Id, Source Name, Source GUID, Start Time, End Time, etc. Once I began profiling it was clear that there were way too many Pre and Post execute logging stored procedure calls.

Let me take a step back and explain the project/package design. The project contained about 50 SSIS packages, some of which were being called by Execute Package tasks within the project. For example, there was a Master package which called some staging packages and some staging packages that called lower level staging packages, and those in turn called some generic logging packages. There were as many as four packages in any given package execution hierarchy. In each package, regardless of where in the package hierarchy it fell, there were four event handlers:

- OnError: Handled exceptions that were then logged to the log database.

- OnVariableValueChanged: Handled the change in those variables we were interested in and logged to the log database.

- OnPreExecute: Handled the logging of the pre-execution data (e.g. start time, source name, etc.) for all package tasks.

- OnPostExecute: Handled the logging of post-execution data (e.g. end time) for all package tasks.

Getting back to what I was seeing in Profiler (many calls to the Pre and Post execute logging stored procedures), it only made sense that the OnPreExecute and OnPostExecute event handlers were being fired each time the logging stored procedures were being called. So why would those event handlers fire so many times?

I started to research event handler propagation to understand what happens in SSIS when we have package level event handlers and a package design in which packages call other packages. I quickly ran into Jamie Thomson’s “SSIS: Understanding event propagation” blog post. In his post he talks about three things:

1) Events being caught by more than one event handler.

2) Event handlers firing events.

3) The System::Propagate variable.

Numbers 1 and 2 were what I was most interested in. Number 3 is something I’ve written about in the past in a blog post titled “SSIS Foreach Loop Container: Continue on Error” when explaining how to continue a Foreach Loop container when a child task fails.

I took Jamie’s example and modified it to fit my situation, which was a four package hierarchy with a single Control Flow task (an empty script task just to get the event handler to fire) in the lowest level package and an OnPostExecute event handler in all packages. Instead of an empty Control Flow task in the parent packages, an Execute Package task was used to call the package one level down (e.g. Package_Level2 called Package_Level1, Package_Level3 called Package_Level2, etc.). Screen shots below depict the design.

Package_Level1 OnPostExecute Event Handler

Package_Level2 Control Flow w/ Execute Package Edit Dialog

When running these sample packages I found that each subsequent level in the hierarchy more than doubles the number of events fired. When running Package_Level1 the OnPostExecute event handler is executed twice: once for the Level1_EmptyControlFlowTask task and then again for the OnPostExecute of the package itself. When running Package_Level2 the OnPostExecute event handler is fired a total of 8 times. See the table below. Package_Level1 bubbles up the Level1_EventHandler (twice), the Level1_EmptyControlFlowTask, and the Package_Level1 OnPostExecute events to the Package_Level2 event handler. In addition to those 4 events we still have the original two events we saw when executing Package_Level1 on its own. And we have the Package_Level2 OnPostExecute events for the Level2_ExecuteChildPackage (the call to execute the Package_Level1 package) and the Package_Level2 package execution.

| Sequence | Event Handler Package | Task | Event |
|---|---|---|---|
| 1 | Package_Level1 | Level1_EmptyControlFlowTask | OnPostExecute |
| 2 | Package_Level2 | Level1_EventHandler | OnPostExecute |
| 3 | Package_Level2 | Level1_EmptyControlFlowTask | OnPostExecute |
| 4 | Package_Level1 | Package_Level1 | OnPostExecute |
| 5 | Package_Level2 | Level1_EventHandler | OnPostExecute |
| 6 | Package_Level2 | Package_Level1 | OnPostExecute |
| 7 | Package_Level2 | Level2_ExecuteChildPackage | OnPostExecute |
| 8 | Package_Level2 | Package_Level2 | OnPostExecute |

When we execute Package_Level4 we see a total of 52 events fired, all triggered by the single Level1_EmptyControlFlowTask task in Package_Level1.

Below is the formula for determining how many additional events will be fired by the top-level package in a package hierarchy, per Control Flow task, when there is a single OnPostExecute event handler task in all packages in the hierarchy.

y = 2^n + x

x = previous package level’s additional events

n = level in package hierarchy (starting at base package level 1)

y = total additional events fired at level n

| Package Level | Calculation | Additional Events Fired | Total Events Fired |
|---|---|---|---|
| 1 | 2^1 + 0 | 2 | 2 |
| 2 | 2^2 + 2 | 6 | 8 |
| 3 | 2^3 + 6 | 14 | 22 |
| 4 | 2^4 + 14 | 30 | 52 |
| 5 | 2^5 + 30 | 62 | 114 |
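To check the arithmetic, or to extend it to deeper hierarchies, the recurrence can be sketched in a few lines of Python. The function names are mine, and the code assumes the same setup as the formula: one Control Flow task at the lowest level and a single OnPostExecute handler task in every package.

```python
def additional_events(level: int) -> int:
    """y = 2^n + x, where x is the previous level's additional events (0 below level 1)."""
    if level < 1:
        return 0
    return 2 ** level + additional_events(level - 1)


def total_events(level: int) -> int:
    """Running total of the additional events for levels 1 through `level`."""
    return sum(additional_events(n) for n in range(1, level + 1))


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, additional_events(n), total_events(n))
```

Unrolling the recurrence shows that the additional events at level n come to 2^(n+1) - 2, so each extra level of nesting roughly doubles the event handler overhead.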

As you can see, having nested packages with package level event handlers can create quite a bit of overhead. I initially failed to see this in my logs because my logging stored procedures were ignoring the event handlers that were fired by other event handlers. However, the process still had the overhead of all the events firing and the stored procedure calls; the data just wasn’t being persisted to the database.

Event handler behavior can be very tricky. Don’t be like me: understand what’s happening before implementing your event handler logic.

Thursday, July 19, 2012

Visual FoxPro Upsizing Wizard

If you're in the unfortunate position that I recently found myself in and need to move data from a Visual FoxPro (VFP for the die-hards) database to a SQL Server database there are a few gotchas you might run into including:

- The lack of a .dbc file required by the Visual FoxPro Upsizing Wizard.

- The error: The Upsizing Wizard could not set the SQL Server database to proper compatibility level for upsizing. In order to upsize, Visual FoxPro must set the compatibility level of the target SQL database to 6.5.

1. Creating a Visual FoxPro Database From Pre-existing .dbf Files

If you know anything about VFP then number 1 is probably a no-brainer. When I started my project I knew absolutely nothing about VFP (and I still don't). So for those of you who are like me, it's very easy to create the .dbc file. Follow the steps below.

- Open VFP

- Click File -> New, select the Database radio button and then click the New File button.

- When presented with the Save File dialog choose the location for your .dbc file.

- Now click the Add Table button found in the Database Designer toolbox.

- When presented with the Open File dialog, navigate to the directory with your .dbf files and select all the files that you want to add to your new database (.dbc file).

- Done. Now you have a database that the Upsizing Wizard can consume.

2. Resolving the 6.5 Compatibility Level Error

Most versions of SQL Server allow you to set the compatibility level of each database within an instance. This allows SQL Server instances of a higher version to host databases of a lower version. For example, SQL Server 2012 allows you to host databases at compatibility level 90, 100, and 110. These numbers are just a confusing way to refer to SQL Server 2005, 2008 (2008 R2 doesn't have its own compatibility level), and 2012. So what, pray tell, is compatibility level 6.5 (65)? It actually refers to SQL Server 6.5 (the one before 7.0, which is the one before 2000). Yeah, it's old.

Anyway, the VFP Upsizing Wizard requires that the SQL Server instance you're upsizing (importing) to supports compatibility level 65 (version 6.5). If you're running into this error you're most likely running SQL Server 2008 or above, as these versions no longer support compatibility level 65 or 70, and 2012 no longer supports 80 (version 2000).
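The level-to-version mapping is easier to see as a lookup table. The sketch below is illustrative only: it covers just the levels mentioned in this post, and the 2005 and 2008 rows are filled in from the statements above about which levels were dropped in 2008 and 2012.

```python
# Compatibility levels named in this post and the release each one refers to.
LEVEL_TO_RELEASE = {
    65: "SQL Server 6.5",
    70: "SQL Server 7.0",
    80: "SQL Server 2000",
    90: "SQL Server 2005",
    100: "SQL Server 2008 / 2008 R2",
    110: "SQL Server 2012",
}

# Levels each host release accepts, limited to the levels discussed in this post.
# Assumption: derived from "2008 dropped 65 and 70, 2012 dropped 80".
SUPPORTED_LEVELS = {
    "SQL Server 2005": {65, 70, 80, 90},
    "SQL Server 2008": {80, 90, 100},
    "SQL Server 2012": {90, 100, 110},
}


def hosts_supporting(level):
    """Return the host releases (from the table above) that accept the given level."""
    return sorted(r for r, levels in SUPPORTED_LEVELS.items() if level in levels)


if __name__ == "__main__":
    print(LEVEL_TO_RELEASE[65], "can be hosted on:", hosts_supporting(65))
```

Only the 2005 row contains 65, which is why the Upsizing Wizard needs a SQL Server 2005 (or older) target.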

What is one to do? The easiest route I found was to download SQL Server 2005 Express Edition. It's free, it's a small download (~55 MB), and it supports 6.5.

Once you've downloaded and installed SQL Server 2005 Express make sure you create your DSNs (the ones that you'll use in the Upsizing Wizard) using the SQL Server Native Client driver (not SQL Server Native Client 10.0 or 11.0 as these refer to 2008 and 2012).

When creating your DSNs keep in mind that if you're running a 64-bit operating system you must use the 32-bit ODBC Data Source Administrator. THIS DOES NOT MEAN TYPING ODBCAD32.EXE IN THE RUN PROMPT. In order to launch the appropriate administrator you must run it from a directory similar to C:\Windows\SysWOW64\odbcad32.exe.
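The path rule is simple enough to encode. The helper below is a hypothetical sketch (the function name and defaults are mine): on 64-bit Windows the 32-bit administrator lives under SysWOW64, while on 32-bit Windows the copy under System32 is already the 32-bit one.

```python
import ntpath  # Windows path rules, so the sketch behaves the same on any OS


def odbc_admin_32bit_path(windows_dir=r"C:\Windows", is_64bit_os=True):
    """Return the full path of the 32-bit ODBC Data Source Administrator."""
    subdir = "SysWOW64" if is_64bit_os else "System32"
    return ntpath.join(windows_dir, subdir, "odbcad32.exe")


if __name__ == "__main__":
    print(odbc_admin_32bit_path())
```

Launch the executable at the returned path directly rather than typing odbcad32.exe in the Run prompt.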

At this point you should be all set to run the Upsizing Wizard. Good luck!

Monday, June 25, 2012

SQL Azure: Development Tools

There are a number of tools available to build your SQL Azure database. In this post I will describe three of them: SSMS (SQL Server Management Studio), SSDT (SQL Server Data Tools), and the SQL Azure Database Manager.

1) SQL Server Management Studio

SQL Server Management Studio, a.k.a. SSMS, and its predecessor Query Analyzer have been the tools of choice for SQL Server database developers who prefer a GUI over a command line. SQL Server 2008 R2 includes support for SQL Azure in SSMS. Naturally, the version of SSMS that ships with SQL Server 2012 also supports the management and development of SQL Azure databases.

While the SSMS 2012 user interface looks a bit different from previous versions because it is now hosted in the WPF-based Visual Studio shell, connecting to a database is the same as connecting from SSMS 2008 R2.

Once connected, the Object Explorer tree looks very similar to an on-premises SQL Server database, although you'll notice that the server icon looks a bit different. Additionally, some folders such as Server Objects and Replication as well as the SQL Server Agent node are missing as this functionality is not currently available on the SQL Azure platform. Refer to MSDN for a list of the SQL Server features not currently supported on the SQL Azure platform.

Now that we have provisioned a SQL Azure server instance as well as created a SQL Azure database, let's create a table. Just like a regular SQL Server database, it's as simple as expanding the database node in Object Explorer, right-clicking the Tables folder and selecting New Table.... For some reason Microsoft has chosen not to provide the familiar table designer user interface. Instead it displays a CREATE TABLE script template. Below is a screen shot of a modified template used to create a PolicyTransactionFact table in our InsuranceDW database. All we had to do was name the table and add columns and data types. Once you have created your table definition you can either hit the F5 key on your keyboard or click the Execute button. The screen shot below was taken after executing the CREATE TABLE script.

Creating other types of SQL Azure objects is just as easy and is extremely similar to creating objects in an on-premises SQL Server database.

2) SQL Server Data Tools

SQL Server Data Tools (SSDT) provides a declarative approach to database development that is more in line with Microsoft's Visual Studio-based application development paradigm. It is hosted within the Microsoft Visual Studio shell and is free for download on MSDN.

Microsoft would like SSMS to remain an administrative tool for SQL Server instances and databases but would like development work to be done in Visual Studio by way of SQL Server Data Tools projects. Additionally, starting with SQL Server 2012, SSDT replaces BIDS as the development environment for SSIS, SSAS, and SSRS.

The first step in SSDT-based database development is to create a SQL Server Database Project. Make sure you install SSDT prior to attempting to create this project type as it is not available with the default installation of Visual Studio 2010.

Once you've created the SQL Server Database Project right-click the top-level project node in Solution Explorer and select Properties. Here is where we set the Target Platform to SQL Azure to make sure that our build output is compatible with the SQL Azure platform.

Once the project is created and the platform appropriately set we can begin to create database objects. Just as we did with SSMS, we'll create a table. As you can see below, SQL Server Database Projects provide a table designer so that you can create tables using a GUI as well as by writing SQL.

Since SSDT is a declarative development environment we don't actually create physical objects on a server as we develop them in code. Once the developer is content with their development they must Publish the current SSDT representation of the database schema to the database server. This is done by right-clicking on the top-level database project node and selecting Publish.

At this point we are presented with a dialog requesting the publishing/deployment information.

Once the connection information and database name are entered hit the Publish button. The results of the publish can be seen in the Data Tools Operations window.

SSDT SQL Server Database Projects are extremely powerful and can play an integral role in DLM (database lifecycle management) and ALM (application lifecycle management) processes. I provide an argument for using SQL Server Database Projects' predecessor Visual Studio Database Project in my blog post titled Visual Studio 2010 Database Projects and also explain the evolution to SQL Server Database Projects in my blog post titled The Future of Visual Studio Database Projects.

3) SQL Azure Database Manager

The last database development tool I'll discuss is the web-based SQL Azure Database Manager. To use the Database Manager you'll need to log into the Azure Platform Portal and navigate to the Database section by clicking on the Database link.

Now navigate the menu tree and select the appropriate database server.

Now click the "Manage" link.

This will take you to the Database Manager log on screen. Enter your credentials and log on. You should see the screen below.

Click the "Design" link in the lower left-hand corner of the screen.

Notice that we're in the Tables section and that we see a list of our tables. Click "New Table" to create a new table.

The Create Table screen should be self-explanatory. You can add/remove columns, set their data types, select primary key columns, set nullability, etc. When you're done, click "Save" and your new table will be added to the database.

Summary

As you've seen, anyone can create a SQL Azure database (whether you want to pay or not) and use any one of the three tools we discussed to create and maintain database objects. SQL Azure isn't right for everyone, but for some organizations and situations it can be a very powerful tool. Hopefully this post will at least convince you to get your feet wet in case you find yourself in one of these situations so that you will have had enough exposure to SQL Azure to know what to do next. Good luck!

Wednesday, June 20, 2012

SQL Azure: Building your first Microsoft database in the cloud

We’ve all been hearing about the cloud for a while and if

you’re exposed to Microsoft at all that means Azure. If you’re a database

person that means SQL Azure. So what’s it all about?

Without getting too wordy, it’s more or less a high-availability,

hosted version of Microsoft’s SQL Server database. Want most of the benefits of

SQL Server but don’t have the hardware or human resources to run it yourself?

SQL Azure might be right for you. Maybe you just want to do a quick

proof-of-concept or prototype but don’t want to take the time of standing up a

SQL Server. SQL Azure might be right for you.

I think I’m probably like a lot of developers in that new

technologies can be a little hard to grasp and sometimes even a little scary

until I get my hands on it and see it in action. The purpose of this post is to

show you how easy it is to create and use database in the cloud on Microsoft’s

Windows Azure platform.

Many developers probably don’t know that they get a free subscription to the Azure platform with any Visual Studio Professional, Premium, or Ultimate with MSDN license. Additionally, BizSpark program members get the same free subscription that is available with Visual Studio Ultimate with MSDN. Details can be found here.

If you don’t fall into the MSDN or BizSpark camps and want to give Azure a test drive without cutting into your budget, there is also a free 90-day trial available at the time of this post (http://www.microsoft.com/windowsazure/free-trial/).

Time to start playing. At this point I’ll assume you’ve signed up, have a subscription and are logged in to the Windows Azure portal (http://windows.azure.com). You should see the Getting Started with Windows Azure screen below.

To begin the process of creating a database server and database, click on the Database link in the bottom left-hand corner of the screen.

Now click on the Create a new SQL Database Server link.

Choose your subscription. Mine is named POC for proof-of-concept.

Select the Region in which you’d like your server hosted.

Now set a password.

You’ll now have to add a firewall rule to allow incoming traffic from the machine(s) from which you’ll be managing this server.
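Once the server exists and an initial rule lets you connect, firewall rules can also be managed from T-SQL. The sketch below assumes you are connected to the server's master database; the rule name and IP address are placeholders, not values from my setup.

```sql
-- Run while connected to the master database of the SQL Azure server.
-- The rule name and IP addresses are placeholders.
EXEC sp_set_firewall_rule
    @name = N'OfficeWorkstation',
    @start_ip_address = '203.0.113.10',
    @end_ip_address = '203.0.113.10';

-- List the current server-level firewall rules to confirm the change.
SELECT name, start_ip_address, end_ip_address
FROM sys.firewall_rules;
```

Using the same address for the start and end of the range opens the server to a single machine, which is usually what you want for a proof-of-concept.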

Once you’ve completed the process of creating a server you’ll come to a screen that provides all the details of your server. You’ll also see that you already have one database, the master database. The master database is required and cannot be deleted. This database contains things such as permissions and usage metrics.

Now that we have a server we can create a database. Click the Create link in the ribbon-like menu at the top of the screen. Provide a name for the new database and choose an appropriate edition and maximum size. To be safe (in terms of billing) choose a 1 GB Web Edition database.
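The same database could also be created with a script instead of the portal. This is a minimal sketch that assumes a connection to the master database and uses a placeholder database name; the edition and size options reflect what SQL Azure offered at the time of this post and may have changed since.

```sql
-- Run while connected to the master database.
-- "MyFirstCloudDb" is a placeholder name.
CREATE DATABASE MyFirstCloudDb
(
    EDITION = 'web',   -- Web Edition, as recommended above
    MAXSIZE = 1 GB     -- smallest size, to keep billing predictable
);
```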

Congrats! You’ve now successfully created your first Microsoft database in the cloud.

Tuesday, June 19, 2012

SSIS Transactions Including Oracle Data Sources

Microsoft SQL Server Integration Services has the out-of-the-box capability to handle transactions. I won't get into the details of how to use it, as this post is specifically geared towards including an Oracle data source/Connection Manager in a transaction. For an overview of transactions in SSIS as well as enabling MSDTC to support this capability, please refer to the links below.

When using transactions with only SQL Server Connection Managers the typical gotcha for the uninitiated is the MSDTC requirement mentioned above. When using an Oracle-based Connection Manager there is an additional dependency. This dependency is the Oracle Services For Microsoft Transaction Server component and is available in the Oracle client installer. Below is a screen shot of the installation screen with this component highlighted.

Once MSDTC and the Oracle Services For Microsoft Transaction Server are installed and enabled, cross-database platform transactions are a breeze.

Wednesday, May 16, 2012

Script to Generate Foreign Key Column Indexes

While quality indexing often needs human intervention, not automated tools, there are some good baseline indexes that can be added to a database.

Most of the databases I design lately are star-schema databases with fact tables that have many foreign keys to dimension tables. Out of the gate I typically add basic non-clustered indexes to all of these foreign keys and adjust as necessary once the database begins to get significant use.

An easy way to do this is to use the SQL Server system catalog views to identify all non-indexed foreign key columns and then use that information to generate the CREATE INDEX statements. Below is a query that does just that.

SELECT
    'CREATE INDEX [IX_' + TableName + '_' + ColumnName + '] ON [' + TableName + '] ( [' + ColumnName + '] );'
FROM (
    -- Every column that participates in a foreign key
    SELECT
        o.name AS TableName
        ,cols.name AS ColumnName
    FROM
        sys.foreign_key_columns fc
        INNER JOIN sys.objects o
            ON fc.parent_object_id = o.object_id
        INNER JOIN sys.columns cols
            ON cols.object_id = o.object_id
            AND fc.parent_column_id = cols.column_id
    EXCEPT
    -- Every column that already appears in an index
    SELECT
        o.name AS TableName
        ,cols.name AS ColumnName
    FROM
        sys.index_columns icols
        INNER JOIN sys.objects o
            ON icols.object_id = o.object_id
        INNER JOIN sys.columns cols
            ON cols.object_id = o.object_id
            AND icols.column_id = cols.column_id
        INNER JOIN sys.schemas s
            ON o.schema_id = s.schema_id
    WHERE
        s.name <> 'sys'
) t
ORDER BY
    TableName
    ,ColumnName
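The query above treats a column as indexed if it appears anywhere in any index, and it emits table names without a schema. A possible variation, offered only as a sketch, schema-qualifies the generated statements and counts a foreign key column as covered only when it is the leading key column of some index. The output column alias is my own naming.

```sql
-- Sketch: schema-qualified CREATE INDEX statements for foreign key columns
-- that are not the leading key column of any existing index.
SELECT
    'CREATE NONCLUSTERED INDEX [IX_' + t.name + '_' + c.name + ']'
    + ' ON ' + QUOTENAME(s.name) + '.' + QUOTENAME(t.name)
    + ' (' + QUOTENAME(c.name) + ');' AS CreateIndexStatement
FROM sys.foreign_key_columns fkc
INNER JOIN sys.tables t
    ON t.object_id = fkc.parent_object_id
INNER JOIN sys.schemas s
    ON s.schema_id = t.schema_id
INNER JOIN sys.columns c
    ON c.object_id = fkc.parent_object_id
    AND c.column_id = fkc.parent_column_id
WHERE NOT EXISTS (
    SELECT 1
    FROM sys.index_columns ic
    WHERE ic.object_id = fkc.parent_object_id
      AND ic.column_id = fkc.parent_column_id
      AND ic.key_ordinal = 1   -- leading key column only
)
GROUP BY s.name, t.name, c.name   -- one statement per column, even if it is in several foreign keys
ORDER BY s.name, t.name, c.name;
```

Review the generated statements before running them; a baseline like this is a starting point, not a substitute for tuning against real workloads.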

SSIS: Access Network Resources on a Server Not on the Same Domain

I recently ran into a situation where my SSIS server needed access to file shares that were not on the domain but were accessible over the network via UNC path.

Typically I would create a domain-based service account and grant it access to any network resources required by the SSIS process. In this case the SSIS server needed to access files on servers on two other domains and these domains did not have a trust between them and the SSIS domain.

The solution was to dynamically create and delete the mapped drives as needed using Execute Process tasks and the NET USE command. Below is an example of this command.

NET USE Z: "\\someserver\someshare" /user:somedomain\someusername somepassword

Note the /user parameter. This is where you can pass the credentials from the domain on which the share resides. If, for some reason, you want to use a mapped drive on the same domain (instead of a UNC path) you can exclude the username and password (assuming that the user under which your process is running already has access to the share).

We need to modify this a little bit to use in an Execute Process task. See below.

Note that the Executable property is set to cmd.exe. This is how we tell the task to run this command from a command prompt. Also note the /c switch. It tells cmd.exe to carry out the command and then terminate, so the task completes without any user intervention. If you wanted to debug the task you could replace the /c switch with /k, which keeps the command prompt window open after the command has run.

After this task runs our process now has the ability to use the newly mapped drive. This is where our Connection Manager comes into play. This could be any Connection Manager that requires network access such as FLATFILE, EXCEL, OLEDB Jet 4.0 for Access, etc. All we have to do is set the Connection Manager's ConnectionString property to a path using our mapped drive.

The key here is to set DelayValidation to True. If we don't do this then the package will fail validation each time because the mapped drive has not been created when SSIS attempts to validate the package. The drive is only mapped after validation is complete and the Execute Process task has run.

You may be thinking, why would validation fail each time? Isn't the mapped drive still there from the last time we ran the package? The answer is no. It's no because of the last step which is to delete the mapped drive once we are done using it. The command for deleting this drive would be the following.

NET USE Z: /delete

All we need here is the drive letter and the delete switch. Below is a screen shot of the Execute Process task.

That's all you need to access file shares with SSIS using dynamically created mapped drives. UNC paths are typically a more reliable method and what I consider to be a best practice when accessing a file system over a network. However, you might find yourself in a situation similar to mine where a mapped drive is necessary to pass credentials, other than the ones being used to run your process, to access a file system resource.

Friday, January 27, 2012

CLR Stored Proc with Output Value Returning NULL

I'm a little embarrassed to be writing a post about this, but I figured there might be someone else out there making the same obvious mistake who might find this useful.

I recently created a CLR stored procedure that returns a value. Not a return code but an actual calculated output. To implement this in C# it is necessary to declare an output parameter in the method definition similar to the following.

public static void CLRSum(SqlInt32 val1, SqlInt32 val2, out SqlInt32 value)
{
    value = val1 + val2;
}

As I alluded to in the title of this post, calls to my new CLR stored procedure were returning NULL. Below is how I was calling my CLR stored procedure from T-SQL.

DECLARE @value INT

EXECUTE dbo.CLRSum 4, 3, @value

SELECT @value

Notice anything missing? It took me a little while, but I finally realized I was missing the OUTPUT keyword. The correct way to call the stored proc is below.

DECLARE @value INT

EXECUTE dbo.CLRSum 4, 3, @value OUTPUT

SELECT @value
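For context, the OUTPUT keyword also has to appear when the CLR method is registered as a stored procedure. The following is only a sketch of how that registration might look: the assembly name, file path, and class name (StoredProcedures) are assumptions, not details from my project.

```sql
-- Assumption: the compiled assembly lives at this placeholder path and the
-- CLRSum method sits in a class named StoredProcedures (hypothetical).
EXEC sp_configure 'clr enabled', 1;
RECONFIGURE;
GO

CREATE ASSEMBLY ClrSumAssembly
FROM 'C:\Assemblies\ClrSum.dll'
WITH PERMISSION_SET = SAFE;
GO

CREATE PROCEDURE dbo.CLRSum
    @val1  INT,
    @val2  INT,
    @value INT OUTPUT   -- maps to the C# "out SqlInt32 value" parameter
AS EXTERNAL NAME ClrSumAssembly.StoredProcedures.CLRSum;
GO
```

The parameter being declared OUTPUT in the procedure definition is not enough on its own; the caller must repeat OUTPUT on the argument, which is exactly the step I missed.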

Wednesday, January 11, 2012

The specified program requires a newer version of Windows - SSDT Install

Look familiar?

Unfortunately SSDT is not supported on Windows XP. The following Windows versions are supported.

- Windows Vista SP2 or higher

- Windows Server 2008 SP2 or higher

- Windows 7 RTM or higher

- Windows Server 2008 R2 RTM or higher
